AI in the Music Industry: How It’s Changing the Game

AI in the music industry is making headlines, much as AI is across nearly every other industry. According to CBS, artificial intelligence was cited as the cause of 3,900 job losses in May 2023.

In music, artists such as Sting are also grappling with AI:

“The building blocks of music belong to us, to human beings. That’s going to be a battle we all have to fight in the next couple of years: Defending our human capital against AI. The tools are useful, but we have to be driving them.”

Denouncing new tech is nothing new for the music industry, of course. It’s always had a love/hate relationship with technology.

Musicians Embrace Tech

In artistic expression, technology tends to start revolutions and create new mediums or genres. When the camera was invented, painting was declared dead. Painting survived, but the camera gave rise to photography, much as the ability to capture a rapid series of pictures later led to video. In music, the electric guitar didn’t kill the acoustic guitar; it created new styles of music.

Artists, composers, producers, and engineers are always looking to push the limits in music. Here are four examples:

Les Paul

Wanting to push the sound a guitar could produce, Les Paul worked on an electric guitar that could amplify its sound and deliver a louder tone than was possible with acoustic instruments. Not stopping at the guitar, he went on to revolutionize the way music was recorded, pioneering techniques such as multitrack recording and overdubbing.

Charles Dodge

Charles Dodge used computer synthesis to reimagine music from the early 1900s. In one of his best-known works, “Any Resemblance Is Purely Coincidental,” Dodge resynthesizes Enrico Caruso’s 1907 recording of “Vesti la giubba” from Pagliacci. The result is a haunting, lonely rendition of something familiar that becomes new.

Grandmaster Flash

Hip hop had its origins in the poor neighborhoods of New York. Electric guitars and other instruments were pipe dreams, but kids had turntables and their parents’ records.

People like Grandmaster Flash perfected the art of finding the “break” in classic dance records. This was the part of the song with prominent drum beats that would get people dancing (or at least bobbing their heads).

Switching between records and scratching them to stay on the breaks made for fantastic parties. These DJ sets led to MCs rapping over the break beats, and hip hop was born.

Depeche Mode

Depeche Mode, one of the biggest bands of the ’80s, started out as a traditional rock band. When the group thought about lugging all of that equipment to every venue they played, they realized synthesizers were far easier to take on the road.

They packed light and plugged right into each venue’s PA. The band evolved and never looked back, becoming an electronic group, creating some of the best music of the era, and changing the “cheesy” perception of synthesizer music.

The Music Industry, on the Other Hand

While artists pushed the limits of technology to create music, the industry resisted technological evolution in efforts to keep the status quo and reap most of the benefits (i.e., profits).

The industry has long counted on fans buying the same music more than once. It pushed new physical formats to replace older ones: cassettes when record sales slowed, CDs when cassettes slowed, and records again when CDs slowed.

When music started moving from physical media to digital files and streaming, the industry tried to criminalize the shift rather than evolve, until it could no longer push back. And each time artists tried to market themselves, record labels looked to take that control away.

Enter AI

The discussion around artificial intelligence (AI) and machine learning has existed in some capacity for years. AI has been talked about as a job killer in both blue- and white-collar industries, but creatives felt safe.

The belief was that AI would never be able to replicate creative works because of its limitations as a machine. Creative work is not linear; it often results from happy mistakes, illogical thinking, and altered states of mind.

That changed with an explosion of AI technology that pushed the boundaries of what we thought possible. Today, the question is not whether AI can write song lyrics and melodies. It’s how much better it’s going to get.

Let’s explore AI in the music industry and just how it will change — and is already changing — the game.

Understanding AI in the Music Industry

The music industry includes how we search for and find untapped talent; how that talent records, distributes, and monetizes its music; and how that music is stored and curated for listeners. The way we consume music has changed just as much as the way musicians make it.

This is what makes machine learning both exciting and troubling. In many ways, AI already affects music and has for several years. In others, it’s only beginning to show its possibilities. Here is how AI works in general:

The Algorithm

According to Tableau, an algorithm is the set of instructions a program will follow in its operation. In basic terms, “an AI algorithm is the programming that tells the computer how to learn to operate on its own.”

AI algorithms work as learning tools. They are what enable a program or application to make sense of the data it is given.

Learning

AI programs learn by taking in massive amounts of data. Programmers supply this data, or users generate it themselves. The data can be provided before the AI does anything, or the AI can collect it as it interacts with users.

For example, ChatGPT is a large language model trained on a fixed body of text that, at the time of writing, ran up to 2021.

Other times, learning happens as the AI recognizes patterns, in a game for instance, and adapts to users as they interact with it.

Application

Once an AI application has learned from data and user interaction, it is capable of interpreting a user’s prompts to create its own content.

One thing to remember is that the application doesn’t only learn from the data; it then reuses that data to create content. This is most evident in images, where faces or easily recognizable objects sometimes look slightly off.

AI in Music Creation

Let’s begin in the part of the industry you thought was safe for a long time: the art of making music. Turns out it’s not so safe.

Music Composition

You can separate music writing into two basic parts: instruments and vocals. Yes, the human voice is itself an instrument, but writing lyrics is separate from writing notes, and the way the two come together varies from songwriter to songwriter.

Lyrics

There are several AI applications that work with text. The most notable is OpenAI’s ChatGPT, which launched in November 2022; by the time GPT-4 arrived in March 2023, the world had taken notice.

As of this writing, the biggest knock on ChatGPT and similar models, like Google’s Bard, is their tendency to invent sources and statistics that don’t exist. If you don’t check what an AI has written, you may end up citing nonexistent websites and other references.

When it comes to song lyrics, however, facts are less essential. The words mainly have to line up with a beat or melody. Several songwriters admit that some of their songs don’t make literal sense; they are strings of words that fit the song’s structure. Paul McCartney even wrote the idea into the Beatles’ “Michelle”:

“Michelle, ma belle,
Sont des mots qui vont très bien ensemble.”

(“These are words that go together well.”)

That’s not to say ChatGPT is the best option for writing song lyrics. It can give you lyrics, but it isn’t necessarily the best tool for the job. Applications like LyricStudio are better suited to the lyric-writing process.

With LyricStudio, you can build a song line by line on a specific topic. Because you’re building the song rather than just reading a finished work, you can use the application for inspiration or guidance instead of blindly accepting its text.

Music

You may think words are one thing, but how could a machine recreate the works of the greats: Mozart, Beethoven, Liszt, Springsteen, Dr. Dre? Herein lies the beauty of AI. Once a model has learned the musical pieces that exist today, it can use them to create “new” compositions that follow the same rules while still being different.

Tools like AIVA take in compositions and learn the characteristics of each. They look for patterns and use them to interpret tone, timing, beats, tempo, and much more.
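To make “looks for patterns” a little more concrete, here is a minimal sketch of one of the oldest pattern-learning techniques in algorithmic composition, a first-order Markov chain over note names. It is not how AIVA works (its internals aren’t described here), and the training phrases and function names are hypothetical. The model only learns which note tends to follow which, then walks those learned transitions to produce a “new” melody built from patterns it has already seen.

```python
import random
from collections import defaultdict


def learn_transitions(melodies):
    """Record which note follows which across all training melodies.

    Repeated pairs are stored repeatedly, so common transitions are
    picked more often during generation.
    """
    transitions = defaultdict(list)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current].append(nxt)
    return transitions


def generate(transitions, start, length, seed=None):
    """Walk the learned transitions to build a melody in the same 'style'."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: no note ever followed this one in training
            break
        melody.append(rng.choice(options))
    return melody


# Hypothetical training data: short phrases written as note names.
training = [
    ["C", "D", "E", "C", "E", "G"],
    ["E", "G", "A", "G", "E", "D", "C"],
    ["C", "E", "G", "A", "G"],
]
model = learn_transitions(training)
print(generate(model, "C", 8, seed=1))
```

Every step comes from a transition found in the training phrases, so the output recombines the source material in new orders. Real systems use far larger models and also account for rhythm, harmony, and dynamics, not just pitch.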

Players

Perhaps an easier pill to swallow is Sony CSL’s AI bassist. Designed to add another band member to the mix, this bassist can learn to play and adapt alongside you as a musician.

AI Tools for Musicians

Innovative musicians, perhaps more than people in other industries, are showing the world that AI can be a tool for people rather than a people replacement. The following tools are in use today and helping artists push their craft forward.

Google NSynth

Google’s Magenta team has been developing NSynth for years with the goal of creating new sounds from a library of existing instruments. The application generates brand-new sounds that blend the characteristics of the instruments in its knowledge base.

MuseNet

OpenAI isn’t stopping at text. The company developed MuseNet, which takes MIDI files you feed it as prompts and essentially completes them to produce a finished musical piece.

Amper Music

Amper Music is an AI tool designed for creators to quickly generate original music for their content. According to Soundbox Tool, “Users input their desired parameters, such as genre, tempo, duration, and mood, and the AI engine composes an original piece of music that fits the specified criteria.”

Melodrive

Focused on gaming and social media music, “Melodrive’s AI technology is made to comprehend and react to a scene’s emotional content and produce music that heightens the audience’s emotional experience.” The music is adjusted for feel so that it can be used in various areas of a gaming experience.

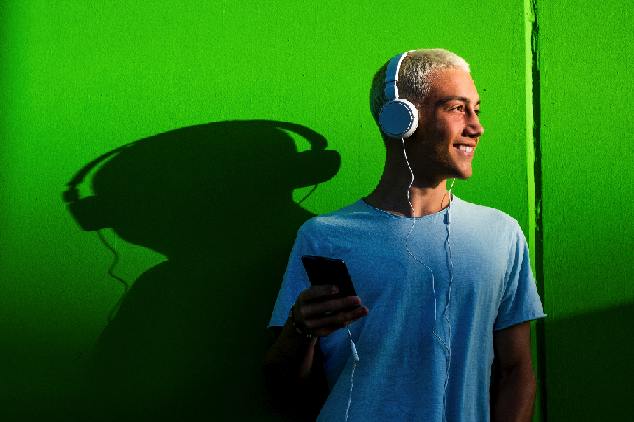

AI in Music Curation and Streaming

While artificial intelligence and machine learning are making headlines in 2023, there is one area of the music industry where machine learning has been working for some time now: Streaming.

Streaming applications like Apple Music, Spotify, and Amazon Music rely heavily on machine learning to get you the music you want without you even requesting it. Data collected over several years is used in algorithms to create the playlists and recommendations you see when you log in.
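Streaming services don’t publish the details of their recommendation systems, but one classic idea behind them is collaborative filtering: if people who share your taste also play a track you haven’t heard, it’s probably worth suggesting. The sketch below is a hypothetical, heavily simplified version that scores unheard tracks by how much each other listener’s history overlaps with yours. The listeners, their histories, and the `recommend` function are made up for illustration.

```python
from collections import Counter


def recommend(histories, user, k=3):
    """Suggest up to k tracks that listeners with overlapping taste played.

    histories maps a (hypothetical) listener ID to the set of tracks they
    played. Each other listener's tracks are weighted by how many tracks
    they share with `user`, so closer matches count more.
    """
    mine = histories[user]
    scores = Counter()
    for other, theirs in histories.items():
        if other == user:
            continue
        overlap = len(mine & theirs)
        if overlap == 0:
            continue  # no shared taste, no signal
        for track in theirs - mine:  # only suggest tracks the user hasn't heard
            scores[track] += overlap
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [track for track, _ in ranked[:k]]


# Hypothetical listening histories.
histories = {
    "ana": {"Smells Like Teen Spirit", "Jump", "Come As You Are"},
    "ben": {"Smells Like Teen Spirit", "Jump", "Panama", "Lithium"},
    "cy": {"Come As You Are", "Lithium", "Heart-Shaped Box"},
    "dee": {"Clair de Lune", "Gymnopédie No. 1"},
}
print(recommend(histories, "ana"))  # ['Lithium', 'Panama', 'Heart-Shaped Box']
```

Production systems work with millions of listeners and typically weigh many more signals, such as skips and repeat plays, but the core intuition of “listeners like you also played” is the same.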

Spotify AI DJ

In February 2023, Spotify announced the beta release of its AI DJ. It builds a playlist on the spot from your listening history and comes complete with an AI DJ voice that checks in every few songs with commentary on what you’re hearing.

The playlist updates in real time. It played in the background while this piece was being written, serving up Nirvana and Van Halen along with a few forgotten favorites and some new discoveries.

Brain.fm

What if you had a personal composer at your fingertips who could write and perform music for whatever you needed at that point in the day? You may want to start the day with energy, concentrate on a high-priority task, or meditate. It turns out you do have one.

Per the AI Tools Directory, “Brain.fm’s patented artificial intelligence-powered music engine serves up unique tunes that help you concentrate, unwind, and sleep. The algorithm behind the music uses neuroscience research to create music that optimizes your brainwave activity for whatever your goal might be: optimal relaxation, focus, or sleep.”

AI in Production and Post-Production

Mixing and mastering are two processes that take up a lot of an engineer’s and producer’s time. That has long been accepted, but it doesn’t have to stay that way. Several tools available now are designed to make the process more efficient and less stressful.

LANDR

One of the best examples of AI helping in post-production is LANDR. The application offers a simple interface that processes your track and determines the best effects to apply to polish the final product. You can A/B the result against the original before accepting the final master file.

Tuney

Tuney prides itself on taking a hybrid approach to mixing assistance. It uses “sounds from real artists to create soundtracks that can be edited and customized to any creative project.” Once you’re ready to dive into mixing, Tuney edits, adjusts, mixes, and remixes your music.

iZotope

iZotope’s plugins have been favored by producers for years. The company has stepped into AI with a suite of assistants aimed at making mixing and mastering easier.

Neutron Mix Assistant

According to the iZotope website, after listening to and analyzing your music, Mix Assistant uses machine learning to suggest levels for your tracks. In short, you can use Mix Assistant to create your rough mix and spend your time tweaking, personalizing, and perfecting the final mix.
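iZotope doesn’t publish how Mix Assistant decides on levels, so the following is only a hypothetical illustration of the simplest version of the idea: measure each track’s RMS level and suggest the gain, in decibels, that would bring it to a common target. The -18 dBFS target, the function names, and the test signals are all assumptions for the example.

```python
import math


def rms_dbfs(samples):
    """RMS level of float samples (-1.0 to 1.0) in dB relative to full scale."""
    if not samples:
        raise ValueError("no samples")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return -math.inf if rms == 0 else 20 * math.log10(rms)


def suggest_gains(tracks, target_dbfs=-18.0):
    """Hypothetical level assistant: gain change (dB) that moves each track to the target."""
    suggestions = {}
    for name, samples in tracks.items():
        level = rms_dbfs(samples)
        # Silent tracks get no suggestion rather than an infinite boost.
        suggestions[name] = None if level == -math.inf else round(target_dbfs - level, 1)
    return suggestions


# Hypothetical stems, simplified to square waves at fixed amplitudes.
tracks = {
    "bass": [0.5, -0.5] * 100,
    "vocal": [0.05, -0.05] * 100,
    "room mic": [0.0] * 200,
}
print(suggest_gains(tracks))
```

RMS is a crude stand-in for perceived loudness. A real assistant would weigh frequency content, genre, and each instrument’s role in the mix, which is why the rough mix it produces is a starting point rather than the final word.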

Nectar Vocal Assistant

If Mix Assistant gets your rough mix started, Nectar’s Vocal Assistant (VA) prepares your vocal settings. You set the vibe you want for your vocal, let VA give it a listen, and watch it create your vocal preset.

Neoverb Reverb Assistant

According to Nick Messitte, writing for iZotope, Reverb Assistant is “a tool that listens to your audio and crafts pre- and post-reverb EQ curves for your music.” After the assistant listens to your track, it provides a starting point for your reverb that you can then adjust using Neoverb’s other tools, like its EQs and three-way blending pad.

RX Repair Assistant

Repair Assistant analyzes audio for problem sounds and removes them. These sounds include sibilants (esses), disruptive reverb, background noise, clipping, and clicks.
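As a rough illustration of the detection half of that job, here is a hypothetical sketch that finds clipping: runs of consecutive samples stuck at or near full scale. RX’s actual algorithms aren’t public; the function name, the 0.999 threshold, and the three-sample minimum are assumptions chosen for the example.

```python
def find_clipped_runs(samples, threshold=0.999, min_run=3):
    """Return (start, end) index pairs of runs where |sample| >= threshold.

    A run must be at least `min_run` samples long, since a single peak
    touching full scale is not necessarily audible clipping.
    `end` is exclusive, like a Python slice.
    """
    runs = []
    start = None
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            if start is None:
                start = i  # a possible clipped run begins here
        elif start is not None:
            if i - start >= min_run:
                runs.append((start, i))
            start = None
    # Close a run that lasts until the final sample.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples)))
    return runs


# Hypothetical signal with one flattened positive peak and one negative one.
signal = [0.2, 0.8, 1.0, 1.0, 1.0, 0.7, -0.3, -1.0, 0.1, -1.0, -1.0, -1.0, -1.0]
print(find_clipped_runs(signal))
```

A repair step would then rebuild the flattened peaks, for instance by interpolating from the surrounding samples; dedicated de-clippers use far more sophisticated reconstruction.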

Ozone Master Assistant

Starting from only two settings, modern and vintage, Master Assistant takes your final mix through the mastering process. After it analyzes your audio, you can make some adjustments, including adding a reference track, before accepting the result and letting the assistant create your final master.

With the full suite of assistants in use, you now have an assistant engineer to set things up for your sessions so you can focus on the most creative parts of the process.

AI in Music Marketing & Promotion

Despite the music industry having a unique product, this part of the process is much the same as in other industries. Artists and their music, shows, appearances, and merch must reach their target audience in order to sell and generate revenue.

AI Outreach

This process begins with segmentation. Using AI models, record labels can quickly analyze data about the people interacting with their artists and build precise segments of fans who are likely to support each artist they want to promote.

Bombshelter Entertainment suggests several ways AI can help artists promote their music and events, including AI chatbots in forums, AI-created social media posts, and analysis tools like Chartmetric that help you better understand your audience.
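Labels don’t disclose which models they use, but a common starting point for segmentation is k-means clustering, which groups people whose behavior looks similar. This hypothetical sketch clusters fans on two made-up features, weekly streams of an artist and shows attended per year, into what you might call casual listeners and superfans. The data and the `kmeans` function are illustrative only.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means clustering.

    Simplification: the first k points seed the cluster centers.
    Returns (labels, centers), where labels[i] is the cluster of points[i].
    """
    centers = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each fan joins the nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append([sum(dim) / len(members) for dim in zip(*members)])
            else:
                new_centers.append(centers[c])  # keep an empty cluster's center in place
        if new_centers == centers:
            break  # converged: assignments no longer change the centers
        centers = new_centers
    return labels, centers


# Hypothetical fans: (weekly streams of the artist, shows attended per year).
fans = [(2, 0), (3, 0), (1, 1), (40, 3), (35, 4), (45, 5)]
labels, centers = kmeans(fans, 2)
print(labels, centers)
```

Practical implementations use smarter seeding, such as k-means++, and scale the features so that one (here, stream counts) doesn’t dominate the distance calculation.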

Predictive Analytics

AI is really about what a learning model can do after collecting data. Creation may get the headlines, but many businesses adopted machine learning in the age of big data for its ability to make predictions.

Could the music industry use an AI learning model to predict the next big thing? Absolutely! In fact, it already does. Musiio can notify participating record labels when its AI identifies users on the platform whose content meets a label’s specifications.

AI in Music: Is it Ethical? Is it Legal?

This question is being raised not only in the music industry but in every avenue of creative work. Anything an AI creates is derived from the material, much of it copyrighted, that the application was trained on. Ethical and legal arguments about plagiarism and copyright infringement come up each time something notable is made exclusively by AI.

In August 2022, Jason M. Allen’s “Théâtre D’opéra Spatial,” created with Midjourney, won a blue ribbon at the Colorado State Fair. Art purists pushed to have him disqualified, and some in the art community branded him a thief.

Similarly, an AI-generated image recently won a prize at the World Photography Organization’s Sony World Photography Awards. Boris Eldagsen, the artist who created and submitted the work, declined the award to spark a conversation about AI as its own creative medium.

Musically, the most notable cases involve using the likeness of artists. In April 2023, rapper Drake took to Instagram in opposition to a remixed version of Ice Spice’s song “Munch,” which included an AI-generated Drake vocal.

You can ask any artist and they will tell you that when they began, they wrote, acted, sang, rapped, or otherwise performed like artists they idolized. Only after years of emulation of various influences did that emulation develop into a unique voice.

That unique voice is a mixture of all the influences up to that point. The process seems a lot like what artificial intelligence models are doing, albeit at a much faster pace.

The legal problems are not cut and dried, either. Art has historically reinterpreted itself in new mediums or through new artists. Painters make replicas of famous works and sell them for a fraction of what the original would fetch.

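Musicians take ideas and bend them into new concepts. The Beastie Boys’ album “Paul’s Boutique” famously stitches together more than a hundred samples, and when flutist James Newton later sued the group over a six-second sample used in “Pass the Mic,” a federal appeals court ruled the use too minimal to count as infringement.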

When you ask a learning model to provide a song in the style of Beethoven or Tool, you are not promoting it as a work by either artist. It is now something new inspired by each artist respectively. And if that’s the case, is it infringement?

Legal Considerations

Who Owns AI Compositions?

One issue with AI compositions is whether they can be copyrighted at all. The U.S. Copyright Act secures rights for “authors,” and the courts have limited authors to humans. Animals, forces of nature, and machines cannot be authors.

The United States Copyright Office rejected AI advocate Stephen Thaler’s application to register an AI-generated work three separate times, finding that “the artwork was not ‘created with contribution from a human author’ and thus failed to meet the human authorship requirement.”

Compensating Copyright Owners

Much like sampling, using copyrighted material in AI-generated music should require payment. Copyrighted music carries metadata that should make this straightforward when a handful of identifiable pieces or voices are used to create something new.

The payout is harder to determine when a composition doesn’t specifically replicate an artist’s style or song, but it is still possible as long as the AI platform retains the metadata of its source material. The trouble is that AI models take in enormous amounts of source material and then discard or transform that data during the learning process.
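Assuming a platform did keep that metadata, the arithmetic of a pro-rata split is simple. The sketch below is hypothetical: it assumes each rights holder has an integer weight (for example, seconds of their material used), and it divides a payment in whole cents, handing leftover cents from rounding to the largest remainders so the parts always add up to the total. The rights holders and weights are made up.

```python
from fractions import Fraction


def split_royalties(total_cents, contributions):
    """Split a payment pro rata by integer contribution weight, in whole cents.

    contributions maps a rights holder (taken from source metadata) to a
    non-negative integer weight. Exact shares are computed as fractions,
    rounded down, and any leftover cents go to the largest remainders.
    """
    weight_sum = sum(contributions.values())
    if weight_sum <= 0:
        raise ValueError("need at least one positive weight")
    exact = {h: Fraction(total_cents * w, weight_sum) for h, w in contributions.items()}
    payout = {h: int(v) for h, v in exact.items()}  # floor, since shares are >= 0
    leftover = total_cents - sum(payout.values())
    by_remainder = sorted(exact, key=lambda h: exact[h] - payout[h], reverse=True)
    for h in by_remainder[:leftover]:
        payout[h] += 1
    return payout


# Hypothetical: $10.00 owed for a track built from three sources.
print(split_royalties(1000, {"Artist A": 30, "Artist B": 20, "Label C": 10}))
# {'Artist A': 500, 'Artist B': 333, 'Label C': 167}
```

The hard part, as noted above, is not this calculation but knowing the weights at all once training has blended thousands of sources together.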

Using Likeness

While most legal considerations around AI in music are complicated, one that seems easy to understand is that of generating music meant to replicate specific artists.

When “Heart on My Sleeve” hit TikTok as a Drake and The Weeknd song by an anonymous producer known as Ghostwriter, the track went viral. The vocals used AI to sound like Drake and The Weeknd, and the track was released without either artist knowing it existed.

When TikTok, Spotify, and other platforms pulled the song, Universal Music Group put out a public statement that said the following: “These instances demonstrate why platforms have a fundamental legal and ethical responsibility to prevent the use of their services in ways that harm artists. We’re encouraged by the engagement of our platform partners on these issues—as they recognize they need to be part of the solution.”

We Need an AI Classification

Right now, music generated with AI can be created and immediately released on social media platforms like TikTok and YouTube. Producers can put it up on their Spotify or Apple Music catalogs. All of this can happen without letting the public know AI was involved.

This needs to change to protect both artists and the public. Artists have a right to protect their likeness and copyrighted material. Equally, consumers should know when they are listening to an AI-generated voice or a human being’s performance in a studio.

Final Words: The Future of AI in the Music Industry

Creative artificial intelligence was once thought impossible, but an explosion in technological evolution has changed that belief. It’s now not only possible but already happening.

We’ve seen artists push back against it, but if history is any indication, artists and companies that stand in the way of progress may find themselves out of touch very quickly. The next wave of artists is watching these developments, and the next generation of musicians will make music with AI, distribute it as NFTs, and perform in the metaverse.

I, for one, would rather stand on the side of evolution than extinction. There are legal considerations that must be addressed, but you can’t simply ban artificial intelligence and machine learning. Used properly, AI is a tool that can help artists, producers, engineers, and countless others in their process, or even give rise to a new artistic medium.